Confident Learning: Estimating Uncertainty in Dataset Labels

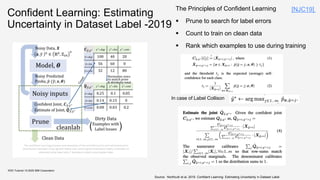

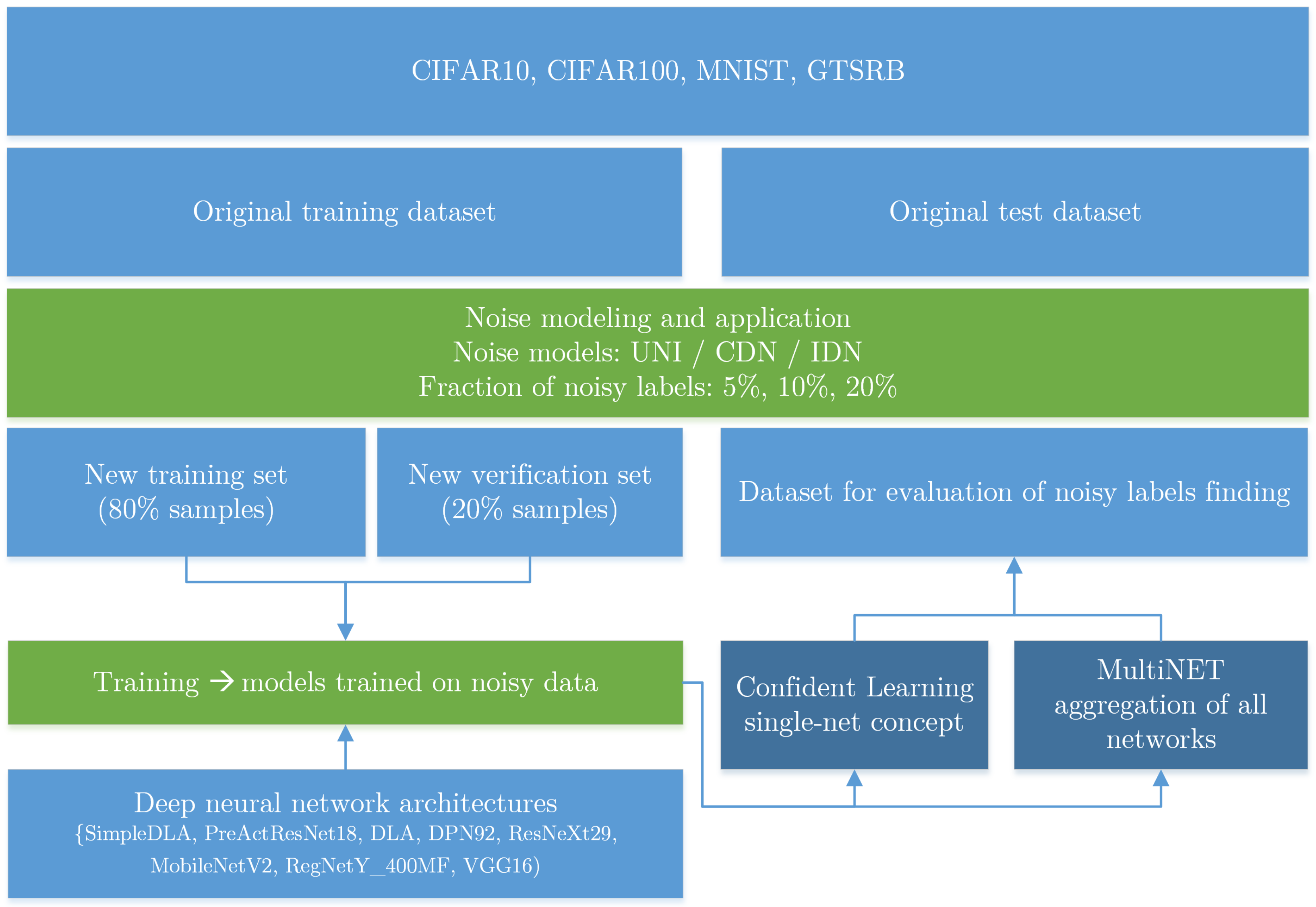

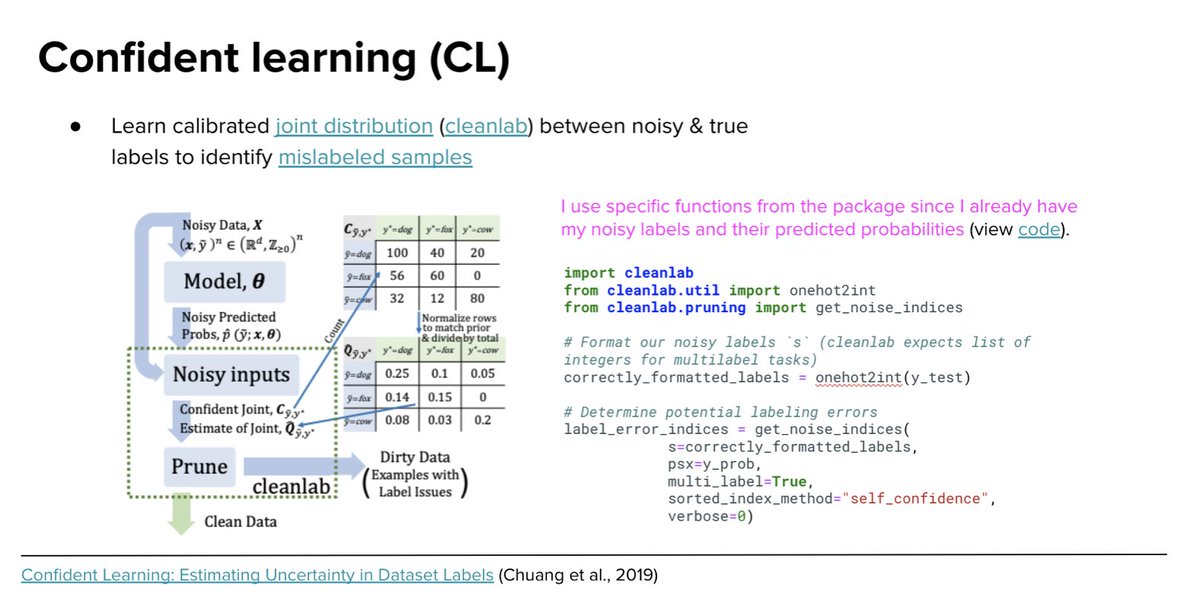

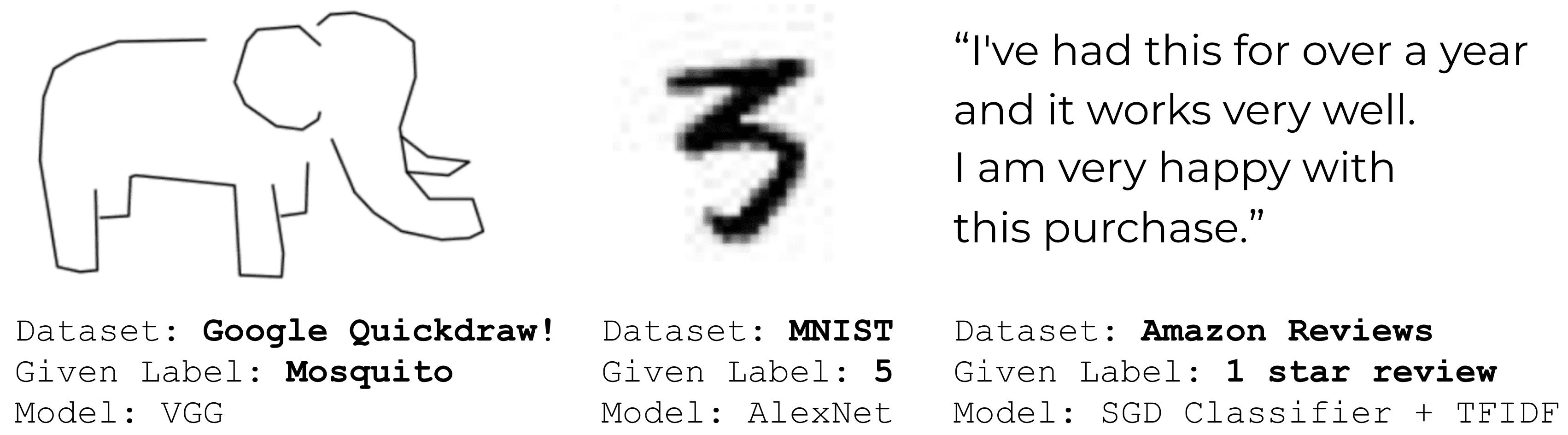

Learning exists in the context of data, yet notions of *confidence* typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach that focuses instead on label quality by characterizing and identifying label errors in datasets. It rests on three principles: pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.
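The "counting with probabilistic thresholds" principle can be sketched in plain Python. This is a minimal illustration, not the cleanlab implementation. It assumes `probs[k][i]` holds out-of-sample predicted probabilities and `labels[k]` the noisy labels, and it assumes each class threshold is the average self-confidence of that class. The function name `confident_joint` and the toy data are hypothetical. An example labeled i is counted in cell (i, j) when j is the most probable class among those that clear their threshold.

```python
from typing import List, Sequence


def confident_joint(labels: Sequence[int],
                    probs: Sequence[Sequence[float]]) -> List[List[int]]:
    """Return the m x m count matrix C where C[i][j] counts examples whose
    noisy label is i and whose likely true label is confidently j."""
    m = len(probs[0])
    # Assumed per-class threshold t_j: the mean predicted probability of
    # class j, averaged over the examples whose given (noisy) label is j.
    thresholds = []
    for j in range(m):
        self_conf = [p[j] for p, y in zip(probs, labels) if y == j]
        if not self_conf:
            raise ValueError(f"class {j} never appears in labels")
        thresholds.append(sum(self_conf) / len(self_conf))

    counts = [[0] * m for _ in range(m)]
    for p, y in zip(probs, labels):
        # Classes whose predicted probability reaches their threshold.
        above = [j for j in range(m) if p[j] >= thresholds[j]]
        if not above:
            continue  # not confidently assigned to any class; not counted
        # Among those, take the class with the highest predicted probability.
        j_star = max(above, key=lambda j: p[j])
        counts[y][j_star] += 1
    return counts


# Hypothetical toy data: example 2 is labeled 0 but looks like class 1.
labels = [0, 0, 0, 1, 1, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
         [0.3, 0.7], [0.1, 0.9], [0.6, 0.4]]
print(confident_joint(labels, probs))  # [[2, 1], [0, 2]]
```

Off-diagonal cells such as `C[0][1] = 1` are the candidate label errors. Example 5 clears neither threshold, so it is left uncounted rather than forced into a cell.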

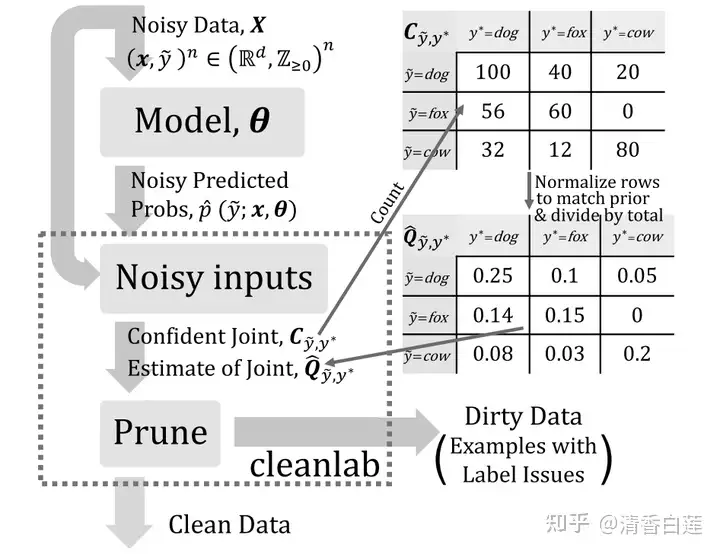

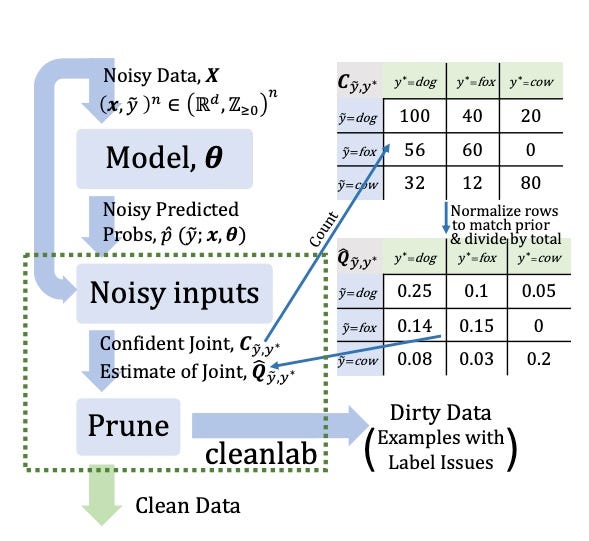



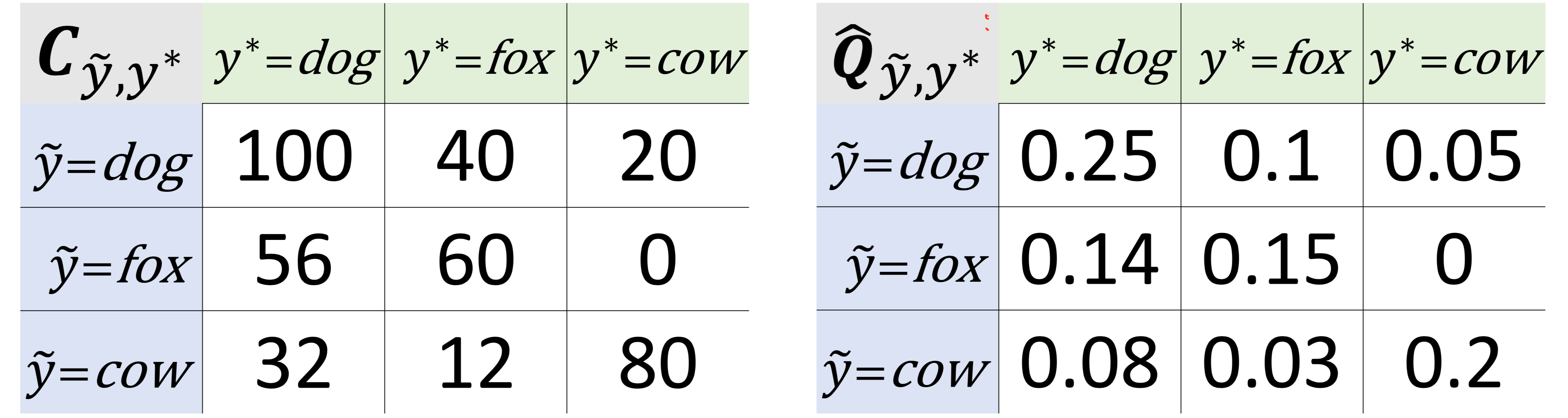

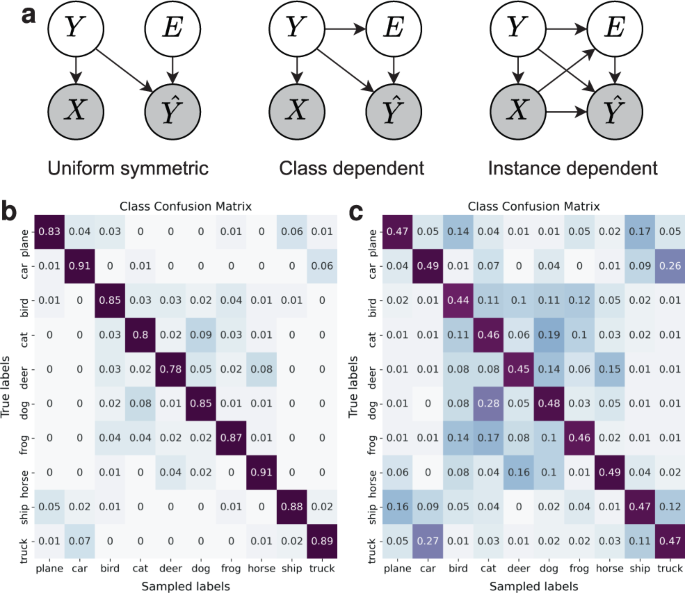

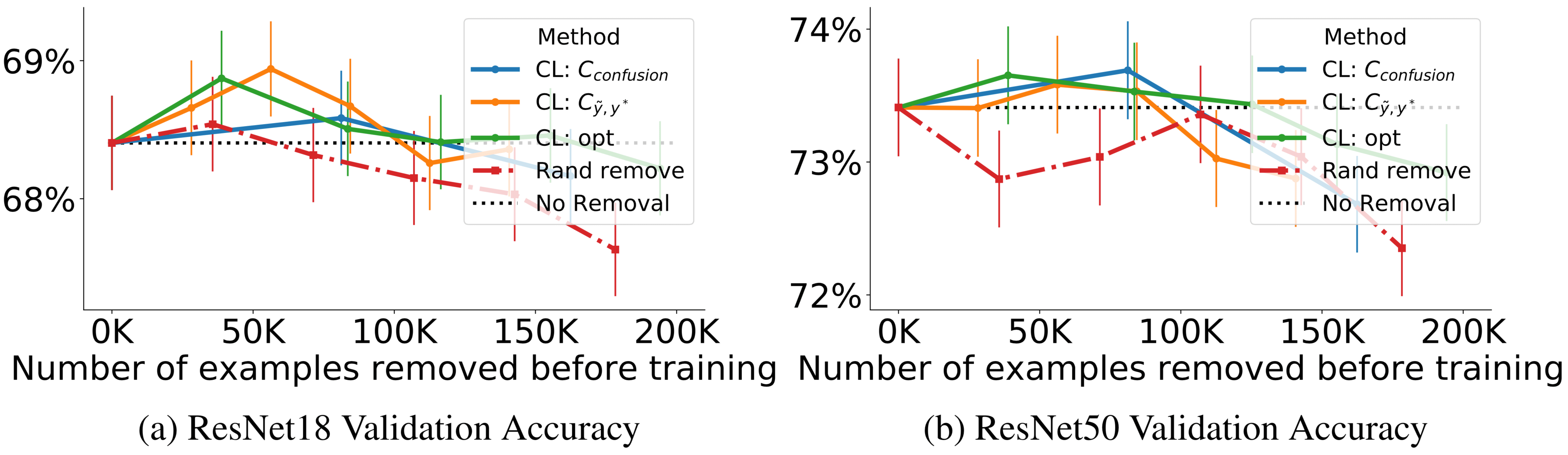

A related article, "Uncertainty-informed deep learning models enable high-confidence ...", notes that several techniques have been developed to estimate uncertainty from deep learning models and discusses high-confidence predictions from uncertainty-quantification (UQ) models on the CPTAC dataset.

Figure 5 of "Confident Learning: Estimating Uncertainty in Dataset Labels" plots the absolute difference $|Q_{\tilde{y},y^*} - \hat{Q}_{\tilde{y},y^*}|$ between the true joint distribution of label noise $Q_{\tilde{y},y^*}$ and the joint $\hat{Q}_{\tilde{y},y^*}$ estimated by confident learning on CIFAR-10. It covers all pairs of classes, at 20%, 40%, and 70% label noise and 20%, 40%, and 60% sparsity.
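The sketch below shows one way to go from confident-joint counts to an estimated joint $\hat{Q}_{\tilde{y},y^*}$, and then to the per-pair absolute error that Figure 5 visualizes. It assumes a calibration step that rescales each row of the count matrix to the observed number of examples with that noisy label, then normalizes so all entries sum to 1. The function names and both matrices are hypothetical and are not values from the figure.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def estimate_joint(counts: Sequence[Sequence[int]],
                   labels: Sequence[int]) -> Matrix:
    """Calibrate a confident-joint count matrix and normalize it to a
    joint distribution Q_hat[i][j] over (noisy label i, true label j)."""
    m = len(counts)
    label_counts = [sum(1 for y in labels if y == i) for i in range(m)]
    calibrated = []
    for i, row in enumerate(counts):
        row_total = sum(row)
        # Rescale row i so it sums to the number of examples labeled i.
        calibrated.append([c / row_total * label_counts[i] if row_total else 0.0
                           for c in row])
    total = sum(sum(row) for row in calibrated)
    # Divide by the grand total so all entries sum to 1.
    return [[v / total for v in row] for row in calibrated]


def abs_difference(q_true: Sequence[Sequence[float]],
                   q_est: Sequence[Sequence[float]]) -> Matrix:
    """Element-wise |Q - Q_hat| for every (noisy, true) class pair."""
    return [[abs(a - b) for a, b in zip(r_true, r_est)]
            for r_true, r_est in zip(q_true, q_est)]


counts = [[2, 1], [0, 2]]            # hypothetical confident-joint counts
labels = [0, 0, 0, 1, 1, 1]
q_hat = estimate_joint(counts, labels)
q_true = [[0.4, 0.1], [0.0, 0.5]]    # hypothetical ground-truth joint
print(q_hat)
print(abs_difference(q_true, q_hat))
```

Calibrating before normalizing keeps each row's mass proportional to how often that noisy label actually occurs, even when some examples were left out of the count matrix.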

In the comments on the blog post "An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets", Curtis Northcutt answered a question from Justin Stuck: "Hi, thanks for the questions. Yes, multi-label is supported, but is alpha (use at your own risk). You can set `multi_label=True` in the `get_noise_indices()` function and other functions."

Section 3 of the paper, "CL Methods", explains that confident learning (CL) estimates the joint distribution between the (noisy) observed labels and the (true) latent labels. CL requires two inputs: (1) the out-of-sample predicted probabilities $\hat{P}_{k,i}$ and (2) the vector of noisy labels $\tilde{y}_k$. The two inputs are linked via index $k$ for all $x_k \in X$.
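Out-of-sample means each row $\hat{P}_{k,\cdot}$ should come from a model that did not train on $x_k$. One common way to obtain such probabilities is K-fold cross-validation. The sketch below assumes 1-D features and a deliberately simple nearest-class-mean classifier. The classifier, the function names, and the data are hypothetical, chosen only to keep the example dependency-free, and any probabilistic classifier could take the classifier's place. Row k of the result stays aligned with `labels[k]`, which is the index-k link the text describes.

```python
import math
from typing import List, Sequence


def centroid_probs(train_x: Sequence[float], train_y: Sequence[int],
                   x: float, num_classes: int) -> List[float]:
    """Toy classifier: softmax over negative squared distance to each class mean."""
    scores = []
    for c in range(num_classes):
        members = [xi for xi, yi in zip(train_x, train_y) if yi == c]
        if members:
            mean = sum(members) / len(members)
            scores.append(-(x - mean) ** 2)
        else:
            scores.append(-math.inf)  # class absent from this training fold
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def out_of_sample_probs(xs: Sequence[float], labels: Sequence[int],
                        num_classes: int, n_folds: int = 3) -> List[List[float]]:
    """Row k holds predicted probabilities for example k from a model
    that never saw example k during training (K-fold cross-validation)."""
    n = len(xs)
    probs: List[List[float]] = [[] for _ in range(n)]
    for fold in range(n_folds):
        held_out = [k for k in range(n) if k % n_folds == fold]
        train = [k for k in range(n) if k % n_folds != fold]
        train_x = [xs[k] for k in train]
        train_y = [labels[k] for k in train]
        for k in held_out:
            probs[k] = centroid_probs(train_x, train_y, xs[k], num_classes)
    return probs


# Example 8 sits among the class-0 points but carries the noisy label 1.
xs = [0.0, 0.1, 0.2, 0.3, 5.0, 5.1, 5.2, 5.3, 0.15]
labels = [0, 0, 0, 0, 1, 1, 1, 1, 1]
probs = out_of_sample_probs(xs, labels, num_classes=2)
print(probs[8])  # nearly all probability mass on class 0 despite label 1
```

Because example 8 is held out when its own probabilities are computed, its noisy label cannot pull the prediction toward class 1. That is why in-sample probabilities are not a substitute.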

The paper is indexed in the ACM Digital Library, and the MIT Faculty has made a PDF of the article openly available. Citation: Northcutt, Curtis, Jiang, Lu and Chuang, Isaac. 2021. "Confident Learning: Estimating Uncertainty in Dataset Labels." Journal of Artificial Intelligence ...

A Chinese-language explainer of the paper summarizes it as follows. Noisy labels raise two problems: first, how to find the noisy examples; second, how to learn well when the data contains noise. Approaching the problem from a data-centric perspective, the authors hypothesize that the key is to characterize the uncertainty of the noisy labels in a dataset precisely and directly.
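The two problems above, finding the noisy examples and then learning without them, can be illustrated with ranking and pruning. The rule below is one simple choice made for illustration, not necessarily the paper's exact procedure. For each given class $i$, it flags $\operatorname{round}\big(n \sum_{j \ne i} \hat{Q}_{\tilde{y}=i,\,y^*=j}\big)$ examples labeled $i$ with the lowest self-confidence, then keeps the rest for training. The function name `prune_by_class` and the joint matrix are hypothetical.

```python
from typing import List, Sequence


def prune_by_class(labels: Sequence[int],
                   probs: Sequence[Sequence[float]],
                   joint: Sequence[Sequence[float]]) -> List[int]:
    """Return indices flagged as likely label errors.

    For each given class i, the number to flag is n times the off-diagonal
    mass of row i of the estimated joint; the flagged examples are those
    labeled i with the lowest self-confidence probs[k][i]."""
    n = len(labels)
    flagged: List[int] = []
    for i, row in enumerate(joint):
        off_diagonal = sum(q for j, q in enumerate(row) if j != i)
        num_to_flag = round(n * off_diagonal)
        members = [k for k in range(n) if labels[k] == i]
        # Rank by self-confidence, least confident first.
        members.sort(key=lambda k: probs[k][i])
        flagged.extend(members[:num_to_flag])
    return sorted(flagged)


labels = [0, 0, 0, 1, 1, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
         [0.3, 0.7], [0.1, 0.9], [0.6, 0.4]]
joint = [[1 / 3, 1 / 6], [0.0, 0.5]]   # hypothetical estimated joint Q_hat
issues = prune_by_class(labels, probs, joint)
issue_set = set(issues)
clean = [k for k in range(len(labels)) if k not in issue_set]
print(issues)  # [2]
print(clean)   # [0, 1, 3, 4, 5]
```

A model retrained on `clean` never sees example 2, whose given label 0 disagrees with its confident prediction of class 1.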


![[Paper Reading] Learning with Noisy Label: Low-Cost Deployment of Deep Learning (Zhihu)](https://pic2.zhimg.com/v2-80277171b5896eb794b5600dc526a8e5_b.jpg)

![Confident Learning: Estimating Uncertainty in Dataset Labels, Figure 11](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/26-Figure11-1.png)

![Confident Learning: Estimating Uncertainty in Dataset Labels, Figure 2](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/7-Figure2-1.png)

![[R] Announcing Confident Learning: Finding and Learning with ...](https://preview.redd.it/c1l2tu875rw31.jpg?width=4000&format=pjpg&auto=webp&s=bcb6f5d9528e92501278aefa843093880f2ef024)

![Confident Learning: Estimating Uncertainty in Dataset Labels, Figure 1](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/2-Figure1-1.png)
